In the previous SPHERE news article, which focused on the application of ontologies for digital twins of buildings, we stressed that interoperability between data environments is needed to reach a rich digital representation. In our philosophy, applications in the SPHERE ecosystem should always be replaceable, to avoid vendor lock-in and to stimulate a best-of-breed approach. This is only possible when data can flow effortlessly and without loss between different applications.

The European Interoperability Framework (EIF) [1] offers a useful structure for discussing the different facets of interoperability. The framework distinguishes four layers of interoperability, each of which needs proper attention:

- Legal: the alignment of legislation and the creation of contracts to ensure interoperability;

- Organizational: business processes (workflows) and exchange requirements;

- Semantic: agreements on the meaning of data elements and relations, as well as data structures to represent them;

- Technical: interface specifications, communication protocols of applications and supporting infrastructure.

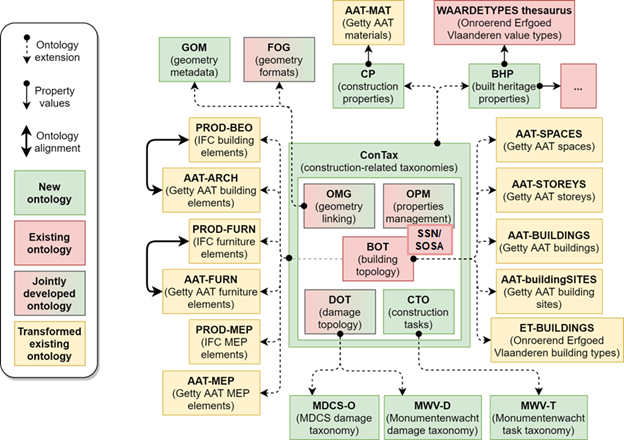

In our previous article, we focused on the concept of “ontology” to cover semantic interoperability, i.e., a human- and machine-understandable description of concepts, relations, attributes and datatypes. More specifically, several web ontologies were selected after a thorough review. Each of them is built on the open W3C standards (RDF, RDFS and OWL) and follows best practices for ontology engineering and publishing. A distinction is made between core ontologies (e.g., BOT and SOSA/SSN) and extending Object Type Libraries (OTLs), which contain the bulk of the domain expert knowledge (e.g., types of building components, types of sensors, measurements, etc.). Together, they form the SPHERE network of ontologies (Figure 1), i.e., a group of both loosely and explicitly coupled ontologies that suits the SPHERE use cases and describes building digital twins at the appropriate level of detail. Links between ontologies can be part of a core ontology or OTL, or they can be defined in so-called “ontology alignments” or in dedicated ontologies.
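
To make the idea of a network of ontologies more concrete, the following minimal Python sketch describes a tiny building as plain (subject, predicate, object) triples. It uses real terms from the BOT (`https://w3id.org/bot#`) and SOSA (`http://www.w3.org/ns/sosa/`) namespaces, while the OTL namespace, the class `TemperatureSensor` and all instance IRIs under `https://example.org/` are hypothetical placeholders, not published SPHERE artefacts. It deliberately avoids any RDF library so that the structure stays visible.

```python
# Core ontology namespaces (real) and placeholder namespaces (hypothetical).
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
BOT = "https://w3id.org/bot#"          # Building Topology Ontology
SOSA = "http://www.w3.org/ns/sosa/"    # Sensor, Observation, Sample, Actuator
OTL = "https://example.org/otl#"       # hypothetical Object Type Library
EX = "https://example.org/building/"   # hypothetical instance data

# A tiny building digital twin as a set of (subject, predicate, object) triples.
graph = {
    (EX + "b1", RDF_TYPE, BOT + "Building"),
    (EX + "b1", BOT + "hasStorey", EX + "s0"),
    (EX + "s0", RDF_TYPE, BOT + "Storey"),
    (EX + "s0", BOT + "hasSpace", EX + "room1"),
    (EX + "room1", RDF_TYPE, BOT + "Space"),
    (EX + "room1", BOT + "containsElement", EX + "sensor1"),
    # One node typed by core ontologies (BOT, SOSA) and by an OTL at once.
    (EX + "sensor1", RDF_TYPE, BOT + "Element"),
    (EX + "sensor1", RDF_TYPE, SOSA + "Sensor"),
    (EX + "sensor1", RDF_TYPE, OTL + "TemperatureSensor"),
}


def objects(subject, predicate):
    """Return all objects linked to `subject` through `predicate`."""
    return {o for s, p, o in graph if s == subject and p == predicate}


def elements_in_building(building):
    """Walk the BOT topology: building -> storeys -> spaces -> elements."""
    found = set()
    for storey in objects(building, BOT + "hasStorey"):
        for space in objects(storey, BOT + "hasSpace"):
            found |= objects(space, BOT + "containsElement")
    return sorted(found)


if __name__ == "__main__":
    for element in elements_in_building(EX + "b1"):
        print(element, sorted(objects(element, RDF_TYPE)))
```

The point of the sketch is that a single node can carry types from several ontologies at once: BOT places the sensor in the building topology, SOSA marks it as a sensor, and the (hypothetical) OTL adds the domain-specific type.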

Application Profiles (APs) complement the ontologies: they define the subset of concepts from the ontology network that is relevant for a specific data integration case. By again applying the corresponding W3C standard, i.e., SHACL, these modeling constraints can be formally defined in a standardized manner and used to validate exchanged datasets with generic tools.
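
The role of an application profile can be illustrated with a toy validator. The sketch below re-implements a very small subset of SHACL Core semantics (a target class plus `minCount`, `maxCount` and `datatype` constraints on a property path) in plain Python; it is not a SHACL processor and ignores, among other things, subclass inference and lexical-form checks. The profile itself is hypothetical: it requires every `sosa:Observation` to reference a sensor via `sosa:madeBySensor` and to carry exactly one `sosa:hasSimpleResult` typed as `xsd:decimal`.

```python
from typing import NamedTuple

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
SOSA = "http://www.w3.org/ns/sosa/"
XSD = "http://www.w3.org/2001/XMLSchema#"
EX = "https://example.org/"  # hypothetical instance namespace


class Literal(NamedTuple):
    """An RDF literal: lexical form plus datatype IRI."""
    lexical: str
    datatype: str


# Hypothetical application profile, mirroring sh:targetClass, sh:path,
# sh:minCount, sh:maxCount and sh:datatype from SHACL Core.
OBSERVATION_SHAPE = {
    "targetClass": SOSA + "Observation",
    "properties": [
        {"path": SOSA + "madeBySensor", "minCount": 1},
        {"path": SOSA + "hasSimpleResult", "minCount": 1, "maxCount": 1,
         "datatype": XSD + "decimal"},
    ],
}


def validate(graph, shape):
    """Return (focus node, path, violated constraint) tuples; empty means conforming."""
    violations = []
    targets = sorted(s for s, p, o in graph
                     if p == RDF_TYPE and o == shape["targetClass"])
    for node in targets:
        for prop in shape["properties"]:
            values = [o for s, p, o in graph if s == node and p == prop["path"]]
            if len(values) < prop.get("minCount", 0):
                violations.append((node, prop["path"], "minCount"))
            if "maxCount" in prop and len(values) > prop["maxCount"]:
                violations.append((node, prop["path"], "maxCount"))
            if "datatype" in prop:
                for value in values:
                    if not (isinstance(value, Literal)
                            and value.datatype == prop["datatype"]):
                        violations.append((node, prop["path"], "datatype"))
    return violations


data = {
    (EX + "obs1", RDF_TYPE, SOSA + "Observation"),
    (EX + "obs1", SOSA + "madeBySensor", EX + "sensor1"),
    (EX + "obs1", SOSA + "hasSimpleResult", Literal("21.5", XSD + "decimal")),
    # obs2 lacks a sensor and carries a string instead of a decimal.
    (EX + "obs2", RDF_TYPE, SOSA + "Observation"),
    (EX + "obs2", SOSA + "hasSimpleResult", Literal("warm", XSD + "string")),
}

if __name__ == "__main__":
    for violation in validate(data, OBSERVATION_SHAPE):
        print(violation)
```

In practice, the same constraints would be written as SHACL shapes in RDF and checked with a generic SHACL engine, which is what makes an application profile reusable across tools.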

On the other side of the spectrum, technical interoperability requires that applications exchanging data implement a documented, shared and stable Application Programming Interface (API) and support a shared communication protocol. In a Web context, a widespread type of API is the Representational State Transfer (REST) API [3], typically using the HTTP protocol with JSON payloads. Such an API can be documented for both humans and machines using the OpenAPI Specification (OAS). While most, if not all, software developers know how to implement and communicate with a REST API, only a few know how to work with ontologies and application profiles based on the W3C Linked Data standards mentioned above.
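
As an illustration of the technical layer, the sketch below holds a minimal OpenAPI 3.0 description of a single, hypothetical endpoint (`GET /observations/{id}`) as a Python dictionary and serializes it to JSON. The endpoint, the field names and the helper `missing_required` are assumptions made for this example. Because the description is machine-readable, a program can, for instance, derive the required keys of a response payload from it. Note that nothing in this description links `sensor` or `value` to an ontology concept.

```python
import json

# Minimal OpenAPI 3.0 description of a hypothetical REST endpoint.
OPENAPI_DOC = {
    "openapi": "3.0.3",
    "info": {"title": "Example observation API (hypothetical)", "version": "0.1.0"},
    "paths": {
        "/observations/{id}": {
            "get": {
                "summary": "Retrieve a single observation",
                "parameters": [{
                    "name": "id", "in": "path", "required": True,
                    "schema": {"type": "string"},
                }],
                "responses": {
                    "200": {
                        "description": "The requested observation",
                        "content": {"application/json": {"schema": {
                            "type": "object",
                            "properties": {
                                "id": {"type": "string"},
                                "sensor": {"type": "string"},
                                "value": {"type": "number"},
                            },
                            "required": ["id", "value"],
                        }}},
                    },
                    "404": {"description": "Observation not found"},
                },
            }
        }
    },
}


def missing_required(payload, path="/observations/{id}", status="200"):
    """List the required keys (per the OpenAPI schema) that `payload` lacks."""
    response = OPENAPI_DOC["paths"][path]["get"]["responses"][status]
    schema = response["content"]["application/json"]["schema"]
    return [key for key in schema.get("required", []) if key not in payload]


if __name__ == "__main__":
    print(json.dumps(OPENAPI_DOC, indent=2))
    print(missing_required({"id": "obs1"}))
```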

To bridge the gap between semantic and technical interoperability, three options were considered.

The first option is to create a REST API that is only loosely based on the ontology concepts.

The downside here is that an explicit connection to the domain concepts defined in the ontologies is missing. This introduces ambiguity and prevents datasets from being validated against the agreed-upon application profiles.

The second option is to set aside the widely supported and customizable REST architecture and instead rely on full Linked Data APIs implementing protocols such as SPARQL, Triple Pattern Fragments or the Linked Data Platform.

Implementing such a full Linked Data API, however, requires considerable experience with the various Linked Data specifications. Many SPHERE partners indicated that, at this point in time, this would demand too much additional effort.
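
For comparison, the sketch below shows what a client of a full Linked Data API does under the SPARQL 1.1 Protocol: it sends a SPARQL query as the `query` parameter of an HTTP GET request and asks for results in the SPARQL 1.1 Query Results JSON Format. The endpoint URL, the query and the sample response are hypothetical; the request object is built with Python's standard library but never sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

ENDPOINT = "https://example.org/sparql"  # hypothetical SPARQL endpoint

# Example query over BOT terms: list all spaces and the storey they belong to.
QUERY = """PREFIX bot: <https://w3id.org/bot#>
SELECT ?storey ?space WHERE { ?storey bot:hasSpace ?space . }"""


def build_sparql_request(endpoint, query):
    """SPARQL 1.1 Protocol 'query via GET': URL-encoded `query` parameter."""
    url = endpoint + "?" + urlencode({"query": query})
    # Ask for the SPARQL 1.1 Query Results JSON Format.
    return Request(url, headers={"Accept": "application/sparql-results+json"})


def bindings_to_rows(results):
    """Flatten a SPARQL JSON results document into a list of dicts."""
    variables = results["head"]["vars"]
    return [
        {var: binding[var]["value"] for var in variables if var in binding}
        for binding in results["results"]["bindings"]
    ]


# A hypothetical response, shaped according to the SPARQL JSON results format.
SAMPLE_RESULTS = {
    "head": {"vars": ["storey", "space"]},
    "results": {"bindings": [{
        "storey": {"type": "uri", "value": "https://example.org/building/s0"},
        "space": {"type": "uri", "value": "https://example.org/building/room1"},
    }]},
}

if __name__ == "__main__":
    request = build_sparql_request(ENDPOINT, QUERY)
    print(request.full_url)  # not sent: the endpoint is a placeholder
    print(bindings_to_rows(SAMPLE_RESULTS))
```

Even this small client requires familiarity with SPARQL syntax, the protocol and its results format, which illustrates the learning effort that made partners hesitant about this option.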

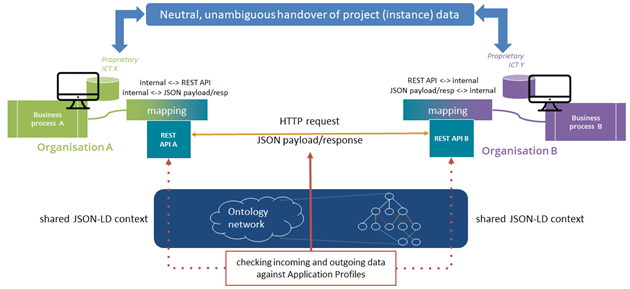

Consequently, the third option (Figure 2) was selected: application developers can keep using their existing REST API infrastructure, while the shared definitions (in the ontologies) and exchange agreements (in the application profiles) provide the semantic interoperability layer.

These goals can be reached by applying JSON-LD, the standardized JSON serialization of Linked Data. By providing a JSON-LD context as a lightweight mapping, the JSON documents emitted or received by a REST API can be interpreted both as plain JSON and as Linked Data. The plain JSON view is used by developers when designing their REST API (technical interoperability). The Linked Data view explicitly connects JSON keys and values to concepts defined in the ontologies, which in turn enables semantic validation of the exchanged JSON data (semantic interoperability). Applications that know how to deal with Linked Data can benefit directly from the fact that the exchanged data is also available as Linked Data, without additional effort. Our approach can therefore be seen as a stepping stone for application developers to reach the full potential of Linked Data technology at their own innovation pace.
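
The following minimal sketch illustrates this dual reading. The JSON object is what a REST API could return, and its `@context` maps the keys to SOSA terms. The context is embedded here for simplicity, although the JSON-LD specification also allows referencing it through an HTTP `Link` header so that the JSON body stays unchanged. The `to_triples` function is a deliberately simplified, hypothetical interpretation that only handles flat objects, term and prefix expansion, `@id`/`@type` aliases and type coercion; a real integration would rely on a conformant JSON-LD processor instead. The result value is sent as a JSON string so that the `xsd:decimal` coercion preserves its lexical form.

```python
import json

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

# A plain JSON payload as a REST API might emit it, plus a JSON-LD context.
payload = {
    "@context": {
        "sosa": "http://www.w3.org/ns/sosa/",
        "xsd": "http://www.w3.org/2001/XMLSchema#",
        "id": "@id",      # alias for the @id keyword
        "type": "@type",  # alias for the @type keyword
        "Observation": "sosa:Observation",
        "sensor": {"@id": "sosa:madeBySensor", "@type": "@id"},
        "value": {"@id": "sosa:hasSimpleResult", "@type": "xsd:decimal"},
    },
    "id": "https://example.org/obs1",
    "type": "Observation",
    "sensor": "https://example.org/sensor1",
    "value": "21.5",
}


def expand_iri(value, ctx, vocab=True):
    """Expand a term (if vocab) or a compact IRI 'prefix:suffix' using ctx."""
    if vocab and value in ctx:
        definition = ctx[value]
        value = definition["@id"] if isinstance(definition, dict) else definition
    prefix, sep, suffix = value.partition(":")
    if sep and not suffix.startswith("//") and isinstance(ctx.get(prefix), str):
        return ctx[prefix] + suffix
    return value


def to_triples(doc):
    """Simplified reading of a flat JSON-LD object as RDF triples.

    Not a conformant JSON-LD processor: no nesting, arrays, @vocab,
    language tags or datatype inference for native JSON values.
    """
    ctx = doc["@context"]
    id_key = next((k for k, v in ctx.items() if v == "@id"), "@id")
    type_key = next((k for k, v in ctx.items() if v == "@type"), "@type")
    subject = doc[id_key]
    triples = []
    for key, value in doc.items():
        if key in ("@context", id_key):
            continue
        if key == type_key:
            triples.append((subject, RDF_TYPE, expand_iri(value, ctx)))
            continue
        predicate = expand_iri(key, ctx)
        if ":" not in predicate:
            continue  # keys without an IRI mapping are dropped, as in JSON-LD
        definition = ctx.get(key)
        coercion = definition.get("@type") if isinstance(definition, dict) else None
        if coercion == "@id":
            obj = expand_iri(value, ctx, vocab=False)  # IRI reference
        elif coercion:
            obj = (value, expand_iri(coercion, ctx))  # typed literal
        else:
            obj = (value, None)  # untyped value
        triples.append((subject, predicate, obj))
    return triples


if __name__ == "__main__":
    print(json.dumps(payload, indent=2))  # the plain JSON view
    for triple in to_triples(payload):    # the Linked Data view
        print(triple)
```

A developer can keep treating `payload` as ordinary JSON, while a Linked Data-aware application obtains triples that can be checked against the SHACL shapes of an application profile.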

At present, creating JSON-LD contexts and SHACL shape constraints is a rather artisanal process, as the specifications are still relatively new. Once the first integrations are finished, we plan to optimize the generation and maintenance of these artefacts. To ensure that they remain reusable beyond the duration of the SPHERE project, the Building Digital Twin Association (BDTA) has pledged to maintain them and host them online together with the related documentation.

In conclusion, tackling the interoperability issues of a modern IT ecosystem requires a layered approach, with different concerns and levels of standardization. This article focused on the technology components needed to reach semantic and technical interoperability in a flexible ecosystem of construction-related applications, i.e., ontologies, application profiles, mappings and APIs.

Written by Mathias Bonduel, from Neanex Technologies

References

[1] Directorate-General for Informatics (European Commission), ‘New European Interoperability Framework: Promoting seamless services and data flows for European public administrations’, Tech. rep., 2017, 48 pp. doi: 10.2799/78681.

[2] M. Bonduel, ‘A Framework for a Linked Data-based Heritage BIM’, Ph.D. dissertation, KU Leuven, Ghent, 2021. Available: https://lirias.kuleuven.be/handle/123456789/674476

[3] R.T. Fielding, ‘Architectural Styles and the Design of Network-based Software Architectures’, Ph.D. dissertation, University of California, Irvine, 2000. Available: https://www.ics.uci.edu/~fielding/pubs/dissertation/top.htm